The 1st International Workshop and Challenge on Disentangled Representation Learning for Controllable Generation

ICCV 2025 DRL4Real Workshop

01 Overview

Disentangled Representation Learning for Controllable Generation (DRL4Real)

Disentangled Representation Learning shows promise for enhancing AI's fundamental understanding of the world, potentially addressing hallucination issues in language models and improving controllability in generative systems. Despite significant academic interest, DRL research remains confined to synthetic scenarios due to a lack of realistic benchmarks and unified evaluation metrics.

The ICCV 2025 DRL4Real Workshop aims to bridge this gap by introducing novel, realistic datasets and comprehensive benchmarks for evaluating DRL methods in practical applications. We will focus on key areas including controllable generation and autonomous driving, exploring how DRL can advance model robustness, interpretability, and generalization capabilities.

02 Dataset (used for the competition)

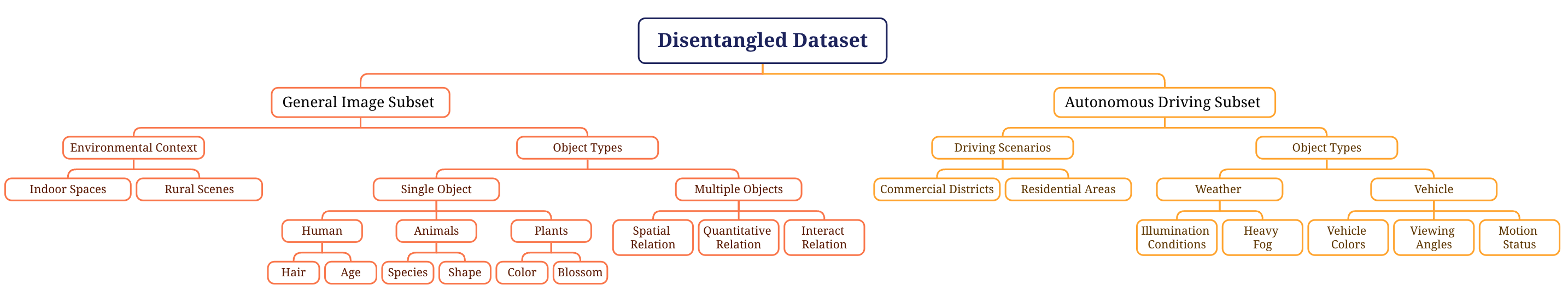

The DRL4Real dataset is specifically designed for disentangled representation learning and controllable generation, covering multiple realistic scenarios and diverse disentanglement factors. It consists of two main categories aligned with our competition tracks:

- General Image Subset: Approximately 14,000 images across 13 distinct categories (car, cat, cattle, dog, eagle, facial, flower, fruit, horse, pigeon, tree, vegetable, plus time-lapse photography). Some images in this subset are synthesized using text-to-image generative models, while others are derived from controllable generation video sources or captured from real videos.

- Single-factor variations: Our dataset provides both text descriptions and corresponding images showing factor changes.

- Multi-factor variations: We primarily provide corresponding text descriptions with a limited number of images as examples.

Single-Factor Manipulation Examples

Here are demonstration videos showing examples of single-factor manipulation:

Car Color Manipulation

Hair Color Manipulation

Rose Color Manipulation

Multi-Factor Manipulation Examples

Here are demonstration images showing an example of multi-factor manipulation (vase appearance and hand position):

Flowers in Vase

Flowers in Hand

Vase in Hand

- Autonomous Driving Subset: A vehicle-centered dataset featuring 5 camera perspectives:

- 4 outward-facing cameras positioned around the vehicle

- 1 top-down overview camera

Each sample consists of 100 frames extracted from videos captured by these 5 cameras, with simultaneous variations in 8 key factors: vehicle speed (accelerate, constant, decelerate), weather conditions (clear, fog, light rain), lighting, and other environmental factors. The dataset includes 3 distinct vehicle types and more than 5 background scenarios, systematically generated using the CARLA simulator, totaling 20,000 high-quality images.

Autonomous Driving Example

Here is a demonstration video showing an example from the autonomous driving dataset:

Autonomous Driving Scene

Dataset Download

Access our comprehensive dataset for disentangled representation learning and controllable generation:

Download DRL4Real Dataset

03 Challenge

Our competition is designed to advance the field of disentangled representation learning through realistic benchmarks and comprehensive evaluation metrics. The challenge is divided into two main tracks:

Competition Tracks

1. Single-Factor Track

This track provides over 13,000 images covering the scenarios described in the Dataset section, along with corresponding text descriptions. Participants must use disentanglement models for this track, such as β-VAE, GEM, StyleGAN, EncDiff, CL-Dis, or STA.

Evaluation Metrics

- Image Quality (50%): Measured using Fréchet Inception Distance (FID)

- Latent Variable Disentanglement (50%): Evaluated using Bidirectional DCI, which extends the traditional DCI metric by also measuring how perturbations in real images affect the corresponding latent variables in the encoder

For more detailed technical information about the metrics, please see this PDF (to be released soon).
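As a rough illustration of the image-quality metric above, the sketch below computes the Fréchet distance between two sets of feature vectors, which is the quantity FID is built on. It assumes the features have already been extracted (FID conventionally uses Inception-v3 activations; extraction is out of scope here), and the function name `frechet_distance` is hypothetical. It is not the official evaluation code for this challenge.

```python
import numpy as np


def frechet_distance(feats_real, feats_gen):
    """Fréchet distance between Gaussians fitted to two feature sets.

    Both inputs are arrays of shape (num_samples, feature_dim).
    Feature extraction is assumed to have happened elsewhere.
    """
    feats_real = np.asarray(feats_real, dtype=np.float64)
    feats_gen = np.asarray(feats_gen, dtype=np.float64)

    mu_r = feats_real.mean(axis=0)
    mu_g = feats_gen.mean(axis=0)
    cov_r = np.atleast_2d(np.cov(feats_real, rowvar=False))
    cov_g = np.atleast_2d(np.cov(feats_gen, rowvar=False))

    # Tr(sqrt(cov_r @ cov_g)) equals the sum of the square roots of the
    # eigenvalues of the product. For positive semi-definite covariances
    # these are real and non-negative up to numerical noise, so we drop
    # the imaginary part and clip tiny negatives.
    eigvals = np.linalg.eigvals(cov_r @ cov_g)
    tr_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()

    diff = mu_r - mu_g
    return float(diff @ diff + np.trace(cov_r) + np.trace(cov_g) - 2.0 * tr_sqrt)
```

Lower values indicate that the generated feature distribution is closer to the real one; two identical feature sets give a distance of approximately zero.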

2. Multi-Factor Track

This track is divided into two sub-categories, each with two separate leaderboards:

2.1 General Image Dataset

- Overall Leaderboard: Open to all methods. Evaluation uses an LLM to assess:

- Intensity scoring across a set of images

- Ratio of intended attribute changes to unintended changes (closer to 1 is better)

- Disentanglement Leaderboard: Only for disentanglement models, using the same metrics as the Single-Factor Track
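The exact formula for the intended-versus-unintended change criterion is not given on this page. The sketch below shows one possible reading under which "closer to 1 is better" holds: the share of total attribute change that falls on the intended attributes. The function name, the input format (per-attribute change magnitudes, for example as scored by an LLM), and the formula itself are all assumptions for illustration, not the official metric.

```python
def intended_change_share(changes, intended):
    """Fraction of total change that lands on intended attributes.

    changes:  dict mapping attribute name -> non-negative change magnitude
              (hypothetical format, e.g. scores produced by an LLM judge)
    intended: set of attribute names that were supposed to change

    Returns a value in [0, 1]; 1.0 means only intended attributes changed.
    This is an assumed reading of the criterion, not the official formula.
    """
    if any(v < 0 for v in changes.values()):
        raise ValueError("change magnitudes must be non-negative")
    total = sum(changes.values())
    if total == 0:
        return 0.0  # nothing changed at all, so the edit had no effect
    on_target = sum(v for name, v in changes.items() if name in intended)
    return on_target / total


# Example: the edit was meant to change only the color.
scores = {"color": 0.8, "pose": 0.1, "background": 0.1}
share = intended_change_share(scores, {"color"})
```

A perfectly disentangled edit would leave every unintended attribute at zero change, giving a share of 1.0.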

2.2 Autonomous Driving Dataset

- Overall Leaderboard: Open to all methods. Evaluation uses the Structural Similarity Index Measure (SSIM) to compare reconstructed frames with the ground truth

- Disentanglement Leaderboard: Only for disentanglement models, using the same metrics as the Single-Factor Track

For more detailed technical information about the metrics, please see this PDF (to be released soon).
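To make the SSIM comparison for the autonomous driving leaderboard concrete, here is a minimal sketch that evaluates the SSIM formula once over a whole grayscale frame and averages the result across frames. This is a simplification: common SSIM implementations average the index over local sliding windows. The function names, the flat-list frame format, the 8-bit `data_range` default, and the per-frame averaging are assumptions, not the challenge's evaluation code.

```python
def global_ssim(x, y, data_range=255.0):
    """Single-window SSIM between two equally sized grayscale frames.

    x, y: flat sequences of pixel intensities (hypothetical format).
    Uses the standard constants C1 = (0.01 L)^2 and C2 = (0.03 L)^2,
    where L is the dynamic range of the pixel values.
    """
    if len(x) != len(y) or len(x) == 0:
        raise ValueError("frames must be non-empty and the same size")
    n = len(x)
    mu_x = sum(x) / n
    mu_y = sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n

    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


def mean_ssim(recon_frames, gt_frames, data_range=255.0):
    """Average the single-window SSIM over paired frames (assumed aggregation)."""
    if len(recon_frames) != len(gt_frames) or len(recon_frames) == 0:
        raise ValueError("need the same non-zero number of frames on both sides")
    scores = [global_ssim(r, g, data_range) for r, g in zip(recon_frames, gt_frames)]
    return sum(scores) / len(scores)
```

A reconstruction identical to the ground truth scores 1; structural differences push the value lower.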

Prizes for Winning Teams:

- First Place: $800 USD (or equivalent prizes)

- Second Place: $400 USD (or equivalent prizes)

- Third Place: $200 USD (or equivalent prizes)

Winners will be invited to submit challenge papers to the DRL4Real 2025 Workshop. Accepted papers will be published in the ICCV 2025 Workshops Proceedings.

Important Dates

- May 31, 23:59:59 AOE: Workshop Announcement and Training & Validation Dataset Release

- June 23, 23:59:59 AOE: Final Test Dataset Release

- June 30, 23:59:59 AOE: Competition Submission Deadline

- July 3, 23:59:59 AOE: Notification of Competition Results

- July 10, 23:59:59 AOE: Challenge Paper Deadline

- July 10, 23:59:59 AOE: Workshop Paper Submission Deadline

- July 11, 23:59:59 AOE: Notification of Paper Acceptance

- August 15, 23:59:59 AOE: Camera Ready Paper Deadline

- October 19: Workshop and Competition Results Presentation at ICCV 2025

For more details about the challenge, please visit the Challenge page.

04 Call for Papers

The DRL4Real Workshop aims to bring together researchers and practitioners from academia and industry to discuss and explore the latest trends, challenges, and innovations in disentangled representation learning and controllable generation. We welcome original research contributions addressing, but not limited to, the following topics:

- Disentangled representation learning

- Controllable image and video generation

- Scene understanding and generation in autonomous driving

- Multi-modal disentanglement (image, video, text)

- Disentanglement in generative models (GANs, VAEs, Diffusion Models)

- Applications of disentangled representations in real-world scenarios

- Few-shot and zero-shot learning with disentangled representations

- Integration of large language models for controllable generation

Submission Details

Papers will be peer-reviewed and must comply with the ICCV 2025 proceedings style, format, and length. The camera-ready deadline aligns with the main conference. Accepted papers must be registered and presented to ensure their inclusion in the IEEE Xplore Library. For details, refer to the ICCV 2025 Author Guidelines.

05 Invited Speakers

Coming Soon

06 People

Organizers

Qiuyu Chen

Ph.D. Candidate

Eastern Institute of Technology & Shanghai Jiao Tong University

Ziqiang Li

Ph.D. Candidate

Eastern Institute of Technology & Shanghai Jiao Tong University

Yuntao Wei

Ph.D. Candidate

Eastern Institute of Technology & The Hong Kong Polytechnic University

Assistance

Shengyang Zhao

Research Associate

Institute of Digital Twin, EIT, Ningbo, China

Xin Li

Postdoc Research Fellow

University of Science and Technology of China, Hefei, China

Jinming Liu

Ph.D. Candidate

Eastern Institute of Technology & Shanghai Jiao Tong University

Qi Wang

Ph.D. Candidate

Eastern Institute of Technology & Shanghai Jiao Tong University

Yunnan Wang

Ph.D. Candidate

Eastern Institute of Technology & Shanghai Jiao Tong University

Wenyao Zhang

Ph.D. Candidate

Eastern Institute of Technology & Shanghai Jiao Tong University

Yuyang Zhang

Ph.D. Candidate

Eastern Institute of Technology & Shanghai Jiao Tong University

Contact

Xin Jin

jinxin@eitech.edu.cn

Assistant Professor, Eastern Institute of Technology, Ningbo, China

Qiuyu Chen

canghaimeng@sjtu.edu.cn

Ph.D. Candidate, Eastern Institute of Technology, Ningbo, China